Implement Least Recently Used (LRU) cache

LRU Cache:

In computing, cache replacement algorithms are optimizing algorithms that a computer program or a hardware maintained structure can follow in order to manage a cache of information stored on the computer. When the cache is full, the algorithm must choose which items to discard to make room for the new ones.

Source: https://en.wikipedia.org/wiki/Cache_algorithms

Initially, when the cache has room for more pages, we keep on adding the pages to the cache. But when cache is completely filled, then to add a new page, another page has to be removed. So, a strategy needs to be used for replacing cache pages.

Least Recently Used cache replacement algorithm is a cache replacement strategy by which the least recently accessed page is removed from the cache when a new page is accessed which is not already present in the cache.

LRU Cache:

In computing, cache replacement algorithms are optimizing algorithms that a computer program or a hardware maintained structure can follow in order to manage a cache of information stored on the computer. When the cache is full, the algorithm must choose which items to discard to make room for the new ones.

Source: https://en.wikipedia.org/wiki/Cache_algorithms

Initially, when the cache has room for more pages, we keep on adding the pages to the cache. But when cache is completely filled, then to add a new page, another page has to be removed. So, a strategy needs to be used for replacing cache pages.

Least Recently Used cache replacement algorithm is a cache replacement strategy by which the least recently accessed page is removed from the cache when a new page is accessed which is not already present in the cache.

We use two data structures to implement an LRU Cache.

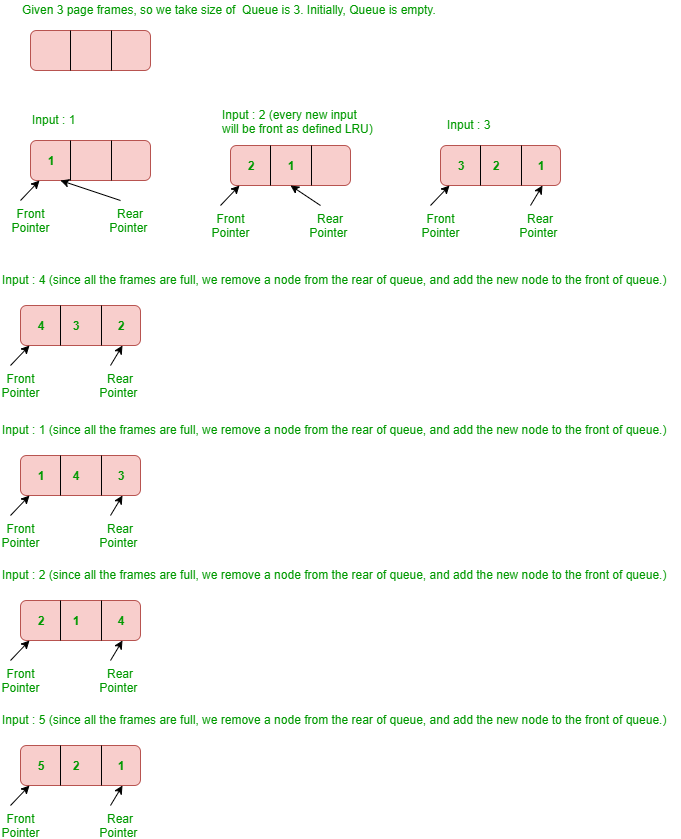

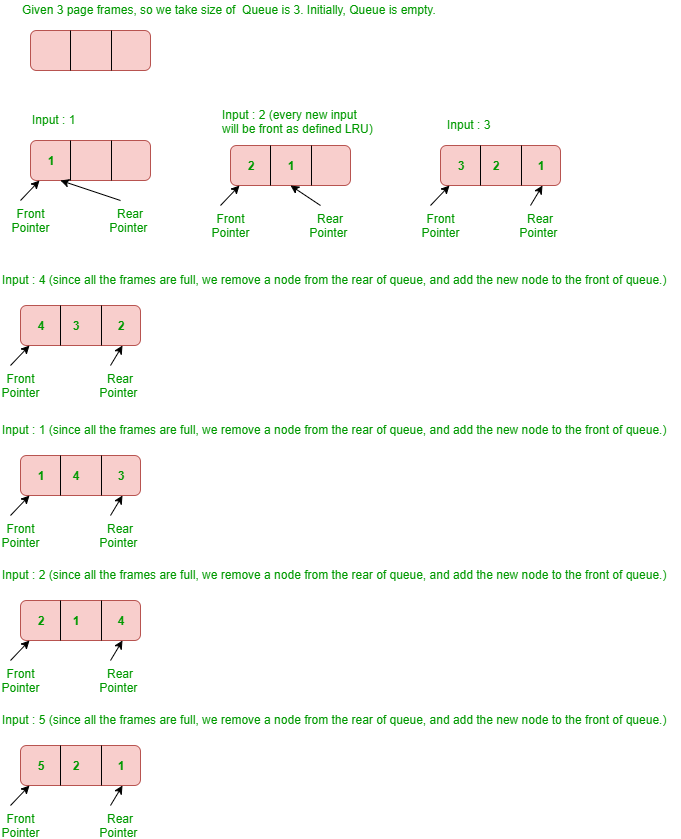

- Queue which is implemented using a doubly linked list. The maximum size of the queue will be equal to the total number of frames available (cache size).The most recently used pages will be near front end and least recently pages will be near rear end.

- A Hash with page number as key and address of the corresponding queue node as value.

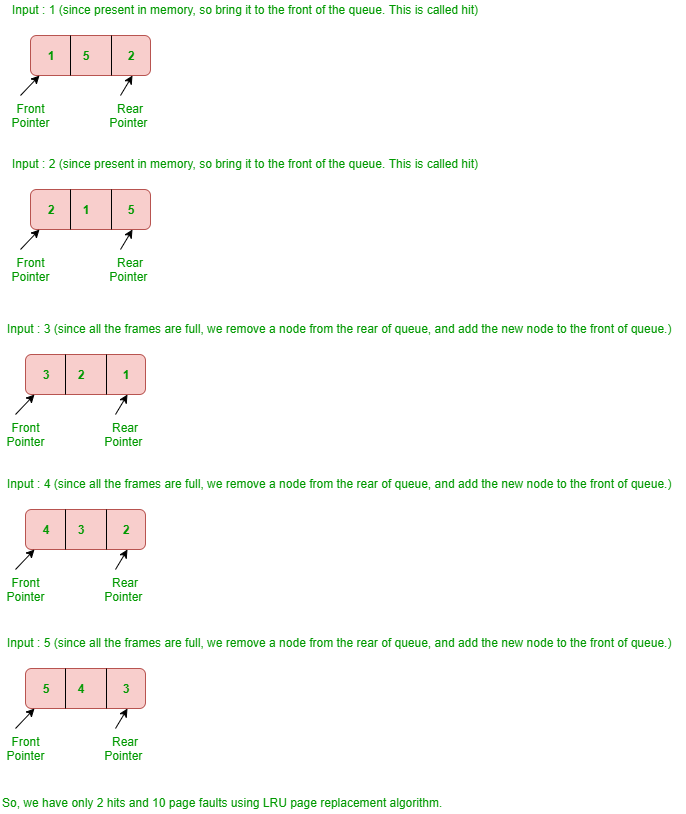

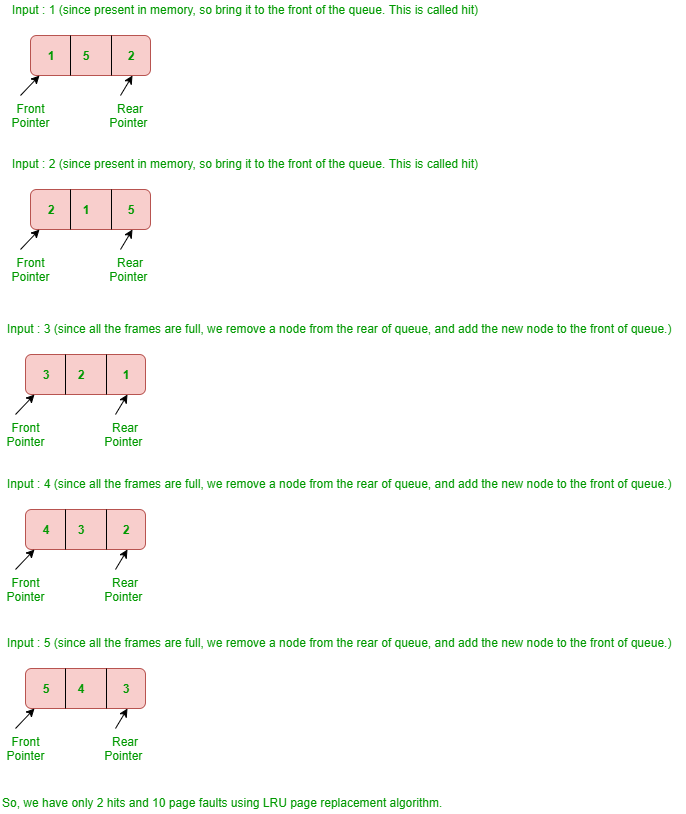

When a page is referenced, the required page may be in the memory. If it is in the memory, we need to detach the node of the list and bring it to the front of the queue.

If the required page is not in the memory, we bring that in memory. In simple words, we add a new node to the front of the queue and update the corresponding node address in the hash. If the queue is full, i.e. all the frames are full, we remove a node from the rear of queue, and add the new node to the front of queue.

If the required page is not in the memory, we bring that in memory. In simple words, we add a new node to the front of the queue and update the corresponding node address in the hash. If the queue is full, i.e. all the frames are full, we remove a node from the rear of queue, and add the new node to the front of queue.

Example – Consider the following reference string :

1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5

Find the number of page faults using least recently used (LRU) page replacement algorithm with 3 page frames.

Explanation –

Explanation –

Note: Initially no page is in the memory.

Output:5 4 1 3

1 comment:

if u will give frame size as 4 and input values are 1 ,2, 3, 1, then it will give wrong output.check it!!!!

Post a Comment